If you already have Java 8 and Python 3 installed, you can skip the first two steps.

Apt install Apache Spark: how to

Installing Apache Spark on Windows 10 may seem complicated to novice users, but this simple tutorial will have you up and running. It is yet another guide on how to install Apache Spark, condensed and simplified to get you started with Apache Spark 2.3.1 in 3 minutes or less.

– After completing all of the installation steps, a folder named tmp/hive is created on the C drive. Change the access permissions of this folder to avoid an error when running Spark.
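The permission change on the tmp/hive folder mentioned above is usually done with winutils.exe itself. The sketch below only builds that command so it can be inspected before running it. The location `C:\hadoop\bin\winutils.exe`, the mode `777`, and the helper name `build_chmod_command` are assumptions for illustration and do not come from this guide.

```python
from pathlib import PureWindowsPath


def build_chmod_command(winutils_path, hive_dir=r"C:\tmp\hive", mode="777"):
    """Return the argument list for 'winutils.exe chmod <mode> <folder>'.

    winutils_path and mode are assumptions: adjust them to where you saved
    winutils.exe and to the permissions you actually want.
    """
    return [
        str(PureWindowsPath(winutils_path)),
        "chmod",
        mode,
        str(PureWindowsPath(hive_dir)),
    ]


if __name__ == "__main__":
    # Hypothetical location of winutils.exe; replace with your own path.
    command = build_chmod_command(r"C:\hadoop\bin\winutils.exe")
    print(" ".join(command))
```

On a Windows machine the resulting list can be passed to `subprocess.run(command, check=True)` from a Command Prompt opened with sufficient rights.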

– Save the winutils.exe file in a folder of your choice and create a HADOOP_HOME variable whose value is the path of that folder (if the error "Unable to load Winutils" occurs, you may need to put winutils.exe in the bin folder).
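Because winutils.exe may end up either directly in the HADOOP_HOME folder or in its bin folder, a quick check can show where it actually is. This is a minimal sketch; the function name `find_winutils` is hypothetical, and it assumes the bin folder sits directly under HADOOP_HOME.

```python
import os
from pathlib import Path


def find_winutils(hadoop_home):
    """Return the path to winutils.exe under hadoop_home, or None if absent."""
    if not hadoop_home:
        return None
    root = Path(hadoop_home)
    # Look in the bin folder first, then in the folder itself.
    for candidate in (root / "bin" / "winutils.exe", root / "winutils.exe"):
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    location = find_winutils(os.environ.get("HADOOP_HOME"))
    if location is None:
        print("winutils.exe not found; check the HADOOP_HOME variable")
    else:
        print(f"winutils.exe found at {location}")
```

If the file is reported in the folder itself and Spark still fails to load it, moving it into the bin folder is the next thing to try.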

Apt install Apache Spark: download

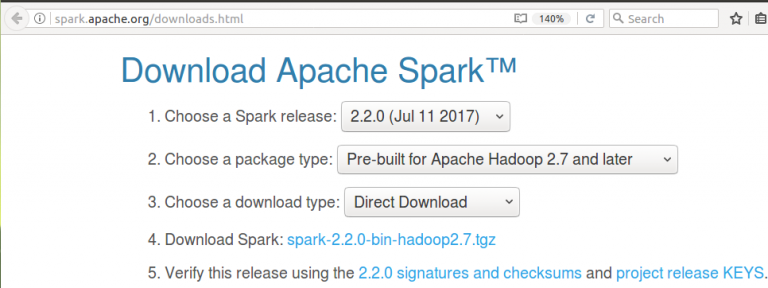

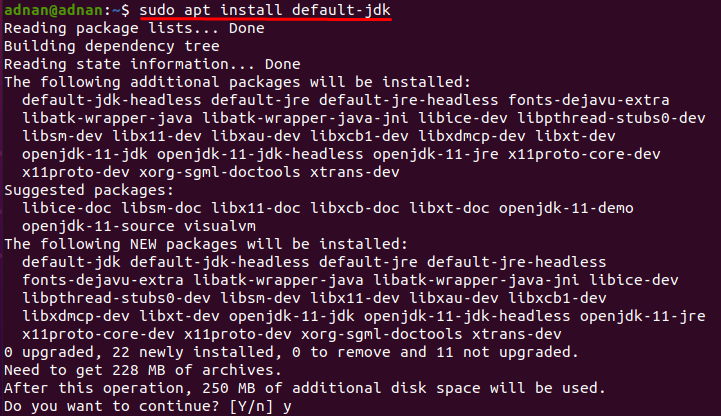

Apache Spark can be installed on many different operating systems, such as Windows, Ubuntu, Fedora and CentOS. This tutorial shows how to install Spark on both Windows and Ubuntu. However, it is recommended to install and deploy Apache Spark on a Linux-based OS (e.g. Ubuntu, Fedora), as Spark is developed on top of the Hadoop ecosystem.

Installing on Windows:

– Create a system variable named JAVA_HOME under Environment Variables (Control Panel -> System -> Advanced system settings in the upper left corner) whose value is the path of the JDK folder (e.g. C:/Program Files/Java/jdk1.8.0_191).
– Add the JDK's bin folder (%JAVA_HOME%\bin) to the Path environment variable.
– Click Save, then open Command Prompt and check the Java installation with the command “java -version”.
– Download and install Scala (select the binary for Windows).
– Create the SCALA_HOME and Path variables (similar to the Java installation).
– Check the installation using the command: scala -version.
– Download Spark and extract the downloaded file.
– Select the version of Hadoop that is compatible with your version of Spark and download the winutils.exe file.

Installing on Ubuntu:

Install Apache Spark on Ubuntu by following the steps listed in this tutorial. The guide also shows basic Spark commands and how to install dependencies. Installing the latest version of Apache Spark is the first step towards learning Spark programming. In this section we are going to install Apache Spark on Ubuntu 18.04 for development purposes only. This installation is in standalone mode and runs on only one machine.

– As Apache Spark needs Java to operate, install Java first.
– Verify the installed Java version by typing java -version, for example: sabiUbuntu20 : java -version openjdk version '11.0.9.1' OpenJDK Runtime Environment (build 11.0.9.1+1-Ubuntu-0ubuntu1.20.04) OpenJDK 64-Bit Server VM (build.
– Download the latest version of Apache Spark from its official website. At the time of writing this tutorial, the latest version of Apache Spark is 2.4.6. Open the Apache Spark download site, go to the "Download Apache Spark" section and click the link in point 3. This takes you to a page with mirror URLs. Copy the link from one of the mirror sites.
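The Windows steps above rely on several environment variables (JAVA_HOME, SCALA_HOME, HADOOP_HOME) and on the matching bin folders being on Path. The following sketch reports which of them are missing. The helper names `missing_variables` and `bin_on_path` are hypothetical, and the check assumes Windows-style paths separated by semicolons.

```python
import os
from pathlib import PureWindowsPath

# Variables named in this guide's Windows steps.
REQUIRED_VARS = ("JAVA_HOME", "SCALA_HOME", "HADOOP_HOME")


def missing_variables(env):
    """Return the required variables that are unset or empty in env."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def bin_on_path(env, name):
    """Return True if the bin folder of the given variable is listed on Path."""
    home = env.get(name)
    if not home:
        return False
    expected = PureWindowsPath(home) / "bin"
    path_value = env.get("Path") or env.get("PATH") or ""
    # PureWindowsPath compares case-insensitively and ignores slash direction.
    entries = [PureWindowsPath(p) for p in path_value.split(";") if p.strip()]
    return expected in entries


if __name__ == "__main__":
    env = dict(os.environ)
    print("Missing variables:", missing_variables(env))
    for name in ("JAVA_HOME", "SCALA_HOME"):
        print(f"{name} bin folder on Path:", bin_on_path(env, name))
```

Run it from a newly opened Command Prompt, since changes to environment variables are not visible to windows that were already open.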
